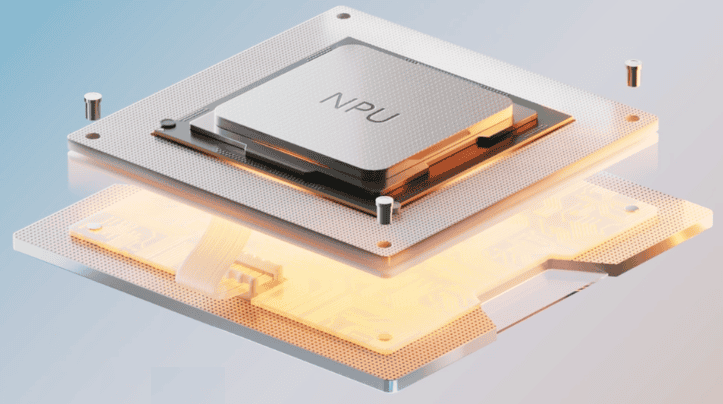

A Neural Processing Unit (NPU) is a specialized microprocessor designed to accelerate machine learning tasks, particularly deep learning and other artificial intelligence (AI) workloads. Traditional processors such as CPUs (Central Processing Units) and GPUs (Graphics Processing Units) handle a wide range of computing tasks. NPUs, by contrast, are optimized for the mathematical operations that neural networks require, such as matrix multiplications and convolutions.

Key Functions of an NPU

- AI and Deep Learning Tasks: NPUs excel at tasks like image recognition, natural language processing (NLP), and voice recognition.

- Efficient Parallel Processing: NPUs are designed to handle multiple data streams simultaneously, making them ideal for processing complex neural networks.

- Energy Efficiency: NPUs typically consume less power than GPUs when executing AI tasks, making them suitable for mobile and embedded devices.
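
One way to see why parallel hardware suits neural networks is to count the work in a single matrix multiplication. The sketch below is illustrative arithmetic only: the function name `matmul_work` and the layer sizes are assumptions, not taken from any particular NPU. Each output element depends on no other output, so in principle all of them could be computed at the same time.

```python
def matmul_work(m, k, n):
    """Work in an (m x k) @ (k x n) matrix multiplication.

    Returns (independent_outputs, macs_per_output, total_macs), where a
    MAC is one multiply-accumulate step.
    """
    independent_outputs = m * n      # each output element depends on no other output
    macs_per_output = k              # one dot product of length k
    return independent_outputs, macs_per_output, independent_outputs * macs_per_output


# Hypothetical layer: 128 input rows, 256 features in, 64 features out.
print(matmul_work(128, 256, 64))     # (8192, 256, 2097152)
```

Even this small layer needs about two million multiply-accumulate steps, spread over 8192 outputs that can be computed independently of one another.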

How Does an NPU Work?

NPUs are tailored to execute the specific mathematical operations that AI models rely on, such as tensor operations, convolutions, and activation functions. They use optimized data paths and parallel computing architectures, which let them process large amounts of data with lower latency than general-purpose processors.
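
To make these operations concrete, here is a minimal pure-Python sketch of a matrix multiplication, a 1D convolution, and a ReLU activation. The function names (`matmul`, `conv1d`, `relu`) and the toy values are illustrative assumptions, not any vendor's NPU API. A real NPU performs the same arithmetic in dedicated parallel hardware rather than in Python loops.

```python
def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n), both given as lists of rows."""
    k = len(b)
    n = len(b[0])
    return [[sum(row[i] * b[i][j] for i in range(k)) for j in range(n)] for row in a]


def conv1d(signal, kernel):
    """Valid-mode 1D convolution as used in neural networks (kernel is not flipped)."""
    width = len(kernel)
    return [
        sum(signal[start + i] * kernel[i] for i in range(width))
        for start in range(len(signal) - width + 1)
    ]


def relu(values):
    """ReLU activation: negative values become zero."""
    return [max(0, v) for v in values]


# Toy dense layer: one input row times a weight matrix, followed by ReLU.
inputs = [[1, -2, 3]]
weights = [[1, 0], [0, 1], [-1, 1]]
pre_activation = matmul(inputs, weights)[0]   # [-2, 1]
print(relu(pre_activation))                   # [0, 1]
print(conv1d([1, 2, 3, 4], [1, 0, -1]))       # [-2, -2]
```

All three functions reduce to repeated multiply-and-add steps over independent outputs, which is the pattern that an NPU's parallel data paths are built to speed up.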

NPU vs. CPU vs. GPU vs. SoC

Here is a detailed comparison of these processing units:

| Feature | NPU (Neural Processing Unit) | CPU (Central Processing Unit) | GPU (Graphics Processing Unit) | SoC (System on Chip) |
|---|---|---|---|---|
| Primary Function | Specialized for AI and deep learning tasks. | General-purpose processing for a wide range of computing tasks. | Optimized for parallel processing, mainly for graphics and some AI tasks. | Integrated chip containing CPU, GPU, NPU, and other components. |
| Architecture | Highly parallel, optimized for AI-specific operations like matrix multiplications. | General-purpose architecture with fewer cores optimized for single-threaded performance. | Parallel architecture with many cores optimized for vector and pixel-based tasks. | Integrated architecture combining multiple processors, optimized for overall efficiency. |
| Power Efficiency | Highly energy-efficient for AI tasks. | Moderate power consumption. | High power consumption, especially for intensive tasks. | Generally power-efficient because multiple functions are integrated in a single chip. |
| Performance in AI Tasks | Best suited to deep learning and AI workloads; can run large models efficiently. | Can run AI models, but less efficiently and more slowly. | Handles AI models well through parallelism, though with higher power draw than an NPU. | Varies with the components integrated into the SoC, which can include a dedicated NPU. |
| Use Cases | AI applications like voice assistants, image recognition, NLP, and autonomous systems. | General computing: operating systems, applications, and control logic. | Graphics rendering, gaming, and some AI workloads. | Mobile devices, IoT devices, and embedded systems where multiple processing functions are needed. |
| Scalability | Scalable for AI-heavy applications, especially in data centers. | Limited scalability for AI without specialized cores. | Scalable for both graphics and some AI tasks. | Depends on the integrated components, which are fixed once the chip is designed. |

The Role of NPUs in Modern Devices

NPUs are becoming more prevalent as AI becomes more integrated into our daily devices. Smartphones, for instance, now feature NPUs to enhance tasks like camera processing, voice recognition, and real-time translation. Autonomous vehicles, smart home devices, and even cloud data centers leverage NPUs to handle AI processing more efficiently.

The Growing Importance of NPUs

As AI and deep learning applications continue to expand, NPUs are expected to play a critical role in optimizing performance and power consumption in both consumer and enterprise applications. With their ability to efficiently handle large-scale neural networks, NPUs will likely become a standard feature in a wide range of devices, from smartphones to autonomous vehicles.

Conclusion

While CPUs and GPUs remain essential in general computing and high-performance tasks, NPUs are becoming the go-to solution for AI and deep learning workloads. By focusing on the unique requirements of neural networks, NPUs deliver better performance and energy efficiency, particularly in applications where AI is a core function.
